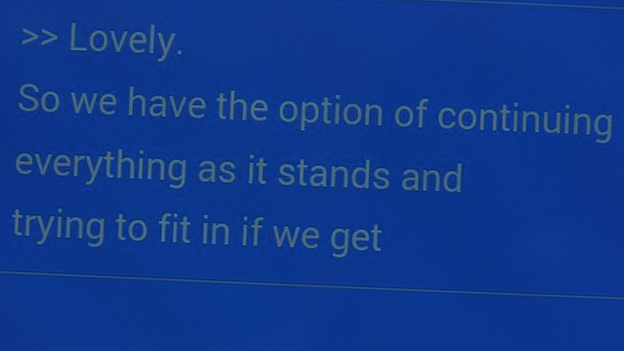

CART: Communication Access Real-Time Translation. Known as Speech-to-Text in the UK.

First off, hello. My name is Jenn Porto. I’ve been a CART writer for approximately seven years. My purpose is to share the fly-by-the-seat-of-my-pants situations I encounter on the job. This post doesn’t make me an expert and doesn’t mean I’ll always make the right decisions. I may say or do something that makes you wince. I’m okay with that. There is no rule book for being a CART writer, and because we work “alone” on the job, we rarely get to share these moments and get POSITIVE feedback. With that said, I am not always going to be grammatically correct according to Morson’s English Guide. This is an account of my day and my thoughts as they come to mind.

August 13, 2014

Where’s my Advil! I left my house at 8 a.m. for a 10 a.m. job with only 27 miles to drive. You do the math. Welcome to the evil beast we call the “Los Angeles freeways.” It’s a good thing I left so early! Full of anxiety, I exited the freeway at 9:27. Every thought I had was followed by, “I should be setting up by now!”

“There’s my parking structure!!!” Shoot, a line! There’s a gigantic sign that reads: Government vehicles only. “Well, this could be a problem.” Rechecking the agency’s instructions, I confirm that I’m at the correct structure. I see an open metered spot on the street. “Great! Well, that never happens in LA.” Get that spot! Shoot, I can’t make a U-turn because I’m surrounded by government buildings and every police car in the city is lurking about. Quickly pulling out of the line, merging three lanes over to the turn lane, squeezing behind a postal truck, turn, turn, legal U-turn, swoosh into my parking spot. I jump out and rush to the meter and, “Curses! The meter is broken!” This explains why the spot was open. I can’t risk a parking ticket. I get back in my car and head back to the darn structure and the darn line. Third in line.

9:35-ish, “Hi. Jennifer Porto. Here for the City Planning meeting.”

With a heavy accent, the parking attendant responds, “City Plant.”

“Ummmm, yup.” WTH is City Plant? Don’t know. Don’t care. “Thank you.” Off I go down the rabbit hole to the basement of the structure. Park. Pulling out my Stenograph bag, bag with monitor, Tory Burch grown-up high heels, and away I go hustling through the garage to find the elevator. I’ve gotten lost every time I’ve parked in this labyrinth of cars, so I was relieved to see a businessman making the same mad dash I was. “Follow him.”

9:45, 15 minutes to go. Up the elevator. Press the third-floor button with authority as if to urge this toaster on pulleys to move faster. “It’s so hot in this elevator. Where is my rubber band? Screw my bouncy curls. This is what being in a toaster feels like! Focus, Jennifer.” Doors open. I’m out. Turn left, right, through the bridge connecting the buildings. “Crap, I don’t have time for a metal detector.” Slap my bags down on the table and wait patiently for the guard to look up from his phone. Now, one would think that since I’m in the building, things would start to get easy — WRONG!

9:50, 10 minutes to go. I find the correct room and try to slip unnoticed through the door with my hands full of Stenograph luggage. Not happening. Bang, bonk, bang. “Sorry.” I sit in the back and scan the room for my client. He’s not here. “Will I get lucky and he’s a no-show?” Fingers crossed!

10:00, go time. The client is still not here. I had set up my equipment in the back of the room, taking up three empty seats. There was a pole blocking my view of the board members. Don’t care. My setup includes a Mira, a Dell laptop that sits on a laptop stand, and an extra monitor set up next to me. Everything is plugged in. “Boo-ya, I’m ready to start!” No need to duct-tape wires down to the carpet at this point; the power plug was right behind my chair. With a second to breathe, I casually walked to the side of the room to gather the board members’ names. I usually get prep before meetings, but not this time.

10:15, still no client. I’m double stroking the board members’ names to make my speaker IDs: SKWREUPL/SKWREUPL = JIM:. The door opens slightly and I can see my client. “Hmmm, why is he not coming in?” It turns out that he had requested a sign language interpreter to voice for him, and for the third time an interpreter did not show up. I offered to voice for the client, as I’ve done with him in the past. He types what he wants to say and I verbalize his text. It’s usually not a problem. Usually.

10:20, the planning administrator now tells me with a pleading look in his eye, “I know you’re set up in this room, but we’re moving to room 1060. Okay?”

With a smile and nod, “You got it. Let me gather my stuff.”

With a double step, I gracefully bust through the door. I break down my setup: clink, bonk, stuff in bag, click, clink. Mira and tripod, laptop lid shut, laptop stand and tripod, pen and pad all get placed in my bag (let’s be real, thrown in my bag); the monitor goes in a separate bag. I’m out! “Really!? Of course 1060 is on the opposite side of the floor. Of course it is!”

Setting up for the second time, I finally sit next to my client and prepare to start. Nope, he doesn’t like my font. He is not verbal, so he writes me a note: Separate font. Crap, I don’t know what he means. I’m already double-spaced. I motion to him with my fingers: Bigger? Smaller? He nods at bigger. Okay. I use CaseCAT software, and I have 30-plus templates in different sizes and fonts to switch between. I switch to Arial 26 and write, “Test test.” Nope, he says something that I don’t understand. I change templates at random, trying to find one he likes. Verdana 15? Nope. This happens at least five more times. Oh, and the planning administrator is sitting with his face in his palm, looking from me to his paperwork, to me, to his paperwork, and now to me! Finally, the client mutters something that I understand: “The original.” Back to Arial 26.

10:26, and we’re off. My fingers were shaky from the anxiety of the morning. I kept mixing up the speakers every time they switched. Which one is he? Ben? No, Jim? No, Ben? NO! It’s Jim! “Get it together.” I couldn’t remember my brief for applicant or advisement. My pink blouse was now stuck to my back. I am sure I had the just-woken-up look, with makeup under my eyes and a droopy ponytail. I was focused: “Write every word perfectly. No misstrokes.” The client was glued to my screen.

The planning administrator finally opened the forum for public comment. My client was reading every word I wrote. “Does anyone wish to make public comments?” After a long pause while he read, he shot his hand up. Now came the dance as we attempted to take turns with my laptop. He would be furiously pecking away to write his comments, and the board members would start talking. But I couldn’t write what they were saying while my client was typing, because the realtime would jump to the bottom of the screen and the client’s thought would be lost. I raised my hand to gesture “pause.” I waited for my client to finish typing his response, then wrote what the board had said, then voiced what my client had typed. It was confusing. Thankfully, my client kept reiterating the same thing: he wanted a postponement because he did not have a sign language interpreter.

One last thought: this was the third time a sign language interpreter did not show up and did not give notice. Three times now, a planning hearing for this matter has been set a month out, the representatives from a HUGE well-known company have come prepared with experts and strategy, the planning administrator has conducted and postponed the hearing, a CART provider has been arranged, and the client has shown up to debate the matter. How is it that a sign language agency has screwed up three times and neither sent an interpreter nor arranged a sub? It baffles my mind. Crap does happen; you get a sub, and you get the job done! Period! This is not a job where you can be lackadaisical and call out sick without arranging a sub. Please let this be a lesson to all of us: get a sub. Oh, and leave your house more than two hours early if you are driving on the beastly LA freeways, ha!

10:46, we adjourned.

Source: Jenn Porto

Press release