Breaking Barriers: Forensic Lipreading and the Bruce Lehrmann Case

“I had just become the first forensic lip reader to have an expert witness report accepted as reliable evidence in a major trial, making Australian legal history…”


Live captioning delivers an inclusive experience for the deaf, hard of hearing, and those who have English as a second language.

As a person is speaking, a speech-to-text reporter (STTR) types out verbatim what is being said, and the transcript appears on a screen for everyone to read.

This simple solution never interferes with what’s being said, while helping to keep everyone in the loop with what’s going on.

Below are the three main places where live captioning should be used.

If you’re a student, do you find it hard to hear in lectures? Would you benefit from having live captioning of your lecture streamed to your smartphone or tablet within a second, and having a transcript immediately afterwards?

We are the first captions provider to offer live captioning services in South Africa. This is a fantastic achievement, as there are so many barriers to deaf access in education, such as the lack of sign language interpreters. Real-time captioning of lectures is being very enthusiastically welcomed and is seen as a real game-changer for deaf students.

Jody, one of our clients, wrote about her experience of live captioning in the classroom:

I have two cochlear implants and I am studying for an Honours degree in Genetics this year.

121 Captions is a service that produces a live transcription of a class or conference, so that the person who needs it can read what is happening on his/her cell phone, tablet or laptop.

121 Captions has been assisting me with my lectures every day since the beginning of April. As I listen to my lecturer, I read the live transcription on my cell phone, although a tablet would be better.

Without 121 assisting me, I wouldn’t have been able to fully understand my lectures, as I tend to miss things when I listen. I also can’t remember things when I hear them, which makes it even more difficult. However, I am able to remember everything I read, and 121 also provides the transcription of the lecture at the end of the day for me to read in my own time and to study from.

It is one of the best services I have encountered, not only for people with a hearing disability but for anyone who needs a transcription of a class, lecture, conference or even a board meeting. Thank you 121 Captions for assisting me!

Check out the video to see how live captioning is set up in the classroom and used by students. If you would like to enquire about our live captions service, contact us or email us at bookings@121captions.com

I’m in the middle of a conference call with five other people, taking a brief on what they want me to write for their website.

One person is travelling on a train, two are in an echoey room, one has a strong Australian accent and the last one is in his car.

It’s hard enough for most people to hear everyone clearly in that situation. And I’m deaf.

But I have an advantage over everyone else. While I’m on the phone, the text of the conversation is appearing on my laptop in front of me. A captioner from 121 has joined the call and is typing the conversation for me to read – word for word in realtime.

I can ‘hear’ clearly what everyone is saying. I am able to interject with my thoughts or advice as needed, and, because the live captioning is so quick, I laugh at funny comments and witticisms at the same time as everyone else.

The team I work with know that a captioner is on the call and will watch, open-mouthed, as an accurate record of the conversation streams across my laptop screen.

But most of my colleagues don’t realise I use this facility. This is because the captioner joins the call well before it is due to start and will sit there patiently until the conference begins (though how they cope with the muzak for so long I don’t know!).

Another bonus of having the captioner is the transcript of the call, which I receive as soon as the call has finished. Obviously it’s not a formal record of what was said, but it’s a great contemporaneous note that I use to check my understanding of the conversation and the actions that follow from the call.

And all this comes for free – well, to me anyway. That’s because it’s one of the reasonable adjustments that Access to Work will accept and pay towards if an assessor confirms live captioning is what you need.

Live captioning from 121 has been a godsend for me. Running my own business with clients all round the country would be an incredible challenge without it. For the first time in a long time I am able to participate in phone calls, confident that I will ‘hear’ everything accurately and be able to contribute fully to the conversation.

Lisa Caldwell runs her own business as a copywriter at Ministry of Writing. She has severe/profound hearing loss after gradually losing her hearing over the last 8 years. You can get in touch with her at credocommunications@gmail.com

Author: William Mager | Original article: BBC News | Reproduced with kind permission of the author

There are many new technologies that can help people with disabilities, like live subtitling 24/7 for deaf people, but how well do they work?

Deaf people always remember the first time a new technology came on the scene, and made life just that little bit easier in a hearing world.

I’ve had many firsts. Television subtitles, text phones, the advent of the internet and texting all opened up opportunities for me to connect with the wider world and communicate more easily.

So when I first heard about Google Glass – wearable technology that positions a small computer screen above your right eye – I was excited. Live subtitling 24/7 and calling up an in-vision interpreter at the touch of a button. Remarkably both seemed possible.

That was a year ago. Since then, Tina Lannin of 121 Captions and Tim Scannell of Microlink have been working to make Google Glass for deaf people a reality. They agreed to let me test out their headset for the day.

First impressions are that it feels quite light, but it is difficult to position so that the glass lens is directly in front of your eye.

Once you get it in the “sweet spot” you can see a small transparent screen. It feels as though it is positioned somewhere in the distance, and it is in sharp focus. The moment you get the screen into that position feels like another first: another moment when the possibilities feel real.

But switching your focus from the screen to what’s going on around you can be a bit of a strain on the eyes. Looking “up” at the screen also makes me look like I’m a bad actor trying to show that I’ve had an idea, or that I’m deep in thought.

The menu system is accessed in two ways. There is a touch screen on the side which can be swiped back and forth, up and down, and you tap to select the option you want.


Or you can control it by speaking, but this can be difficult if you’re deaf. Saying “OK Glass” to activate voice commands can be a bit hit and miss if your voice is not clear, like mine.

One of the main problems is the “wink to take a picture” setting. But I wink a lot. I also blink a lot. So I turn that setting off.

After a few minutes of reading online articles via Glass, it’s time to test out live remote captioning software in the real world. Lannin and Scannell’s service is called MiCap, a remote captioning service that works on several platforms – laptop, tablet, smartphone, e-book and Google Glass.

We set up in a quiet meeting room. After some fiddling with wi-fi and pairing various devices, we put a tablet in the middle of the table as our “listener”, and I put the headset on. As three of my colleagues engage in a heated discussion about the schedule for programme 32 of See Hear, the remote captioner, listening somewhere in the cloud, begins to transcribe what they are hearing.

My first reaction is amazement. The captions scrolling across the screen in front of my eye are fast and word perfect, with a tiny delay of one or two seconds. It is better than the live subtitling seen on television, and better than most palantypists who convert speech to text. I can follow everything that is being said in the room. Even more impressively, this is the first time that the app has been tested in a meeting. I can look around, listen a bit, and read the subtitles if I miss something.

But after a while, tiredness overtakes excitement, and I take the headset off.

See Hear is broadcast on BBC Two at 10:30 GMT on Wednesdays – or catch up on BBC iPlayer. The new series begins on 15 October 2014.

The headset itself is uncomfortable and fiddly, but despite this my first experience of Google Glass was enjoyable. It doesn’t offer anything that I can’t already do on my smartphone, but the ability to look directly at someone while reading the subtitles does make social interaction more “natural”.

I am excited about the apps and software being developed by deaf-led companies in the UK. Not just remote captioning – also remote sign language interpreting. UK company SignVideo are already the first to offer live sign language interpreting via the Android and iOS platforms, and say that they’ll attempt a Google Glass equivalent in the future if demand is high enough.

Other companies such as Samsung and Microsoft are developing their own forms of smart glasses and wearable technology, and as these innovations reach the mainstream, the range of applications that could help disabled people seems likely to grow.

There are lots of exciting tech firsts to come but I still prefer a more old-fashioned technology – the sign-language interpreter. They’re temperamental, and they might make mistakes too, but they’re fast, adaptable, portable – and they don’t need tech support when things go wrong.



One of our stenographers is writing real-time captions (CART, or verbatim speech-to-text) for a deaf surgeon in the USA who wears Glass. He uses an iPad with a microphone and hangs it on the IV pole. The stenographer uses special settings and a link for the Glass. It really is liberating for this client to have realtime captions as he works.

The first surgeries streamed using Google Glass were performed in June 2013.

Dr Grossmann, a member of the Google Glass Explorer program, performed a world-premiere surgery with Glass in the USA. This surgery was a PEG (Percutaneous Endoscopic Gastrostomy). The real technological advance was the ability to stream the surgery to an overseas audience.

The second surgery was a chondrocyte implant performed in Madrid, Spain, and broadcast to Stanford University. Dr Pedro Guillén simultaneously streamed a live surgical operation and consulted on it, enabling Dr Homero Rivas, at Stanford University, to attend and give Dr Guillén useful feedback in real time.

Dr Christopher Kaeding, an orthopaedic surgeon at The Ohio State University Wexner Medical Center, used Google Glass to consult with a colleague using live, point-of-view video from the operating room. He was also able to stream live video of the operation to students at the University:

“To be honest, once we got into the surgery, I often forgot the device was there. It just seemed very intuitive and fit seamlessly.”

This was the first time in the US that Google Glass had been used during an operation and it was only used at a very basic level. Possible future uses of this technology could include hospital staff using voice commands to call up x-ray or MRI images, patient information or reference materials whilst they are doing their ward rounds. The opportunity to be able to get the information they need in an instant could have a significant impact on patient care. Another opportunity Google Glass offers is the ability to collaborate with experts from anywhere in the world, in real-time, during operations.

Clínica CEMTRO, in Madrid, is currently conducting a broad study involving more than a hundred universities around the world to understand the possible applications of Google Glass technology in e-health, telemedicine and tele-education.

Credits: Ohio State University Wexner Medical Center, Clínica CEMTRO

Carla was sitting in London today, chatting online to our realtime captioner, Jeanette, in Kansas. Jeanette was about to provide dual output live captioning of a religious service for a television station in Arkansas, and asked if Carla wanted to watch. You bet!

What is remote dual output live captioning?

Remote captioning means the captioner is listening to a speaker in a different location and producing web-accessible captions. We use the internet and VOIP (typically Skype) to do this.

Jeanette called the Arkansas TV station over VOIP so she could hear their audio. Her computer equipment and CAT (computer-aided transcription) software connect to a modem or network over the internet, and the captions are embedded in the television signal. The captions are then visible to any viewer watching on a TV set with a built-in decoder. In the UK these are known as subtitles; in the US they are known as closed captions.

Today, Jeanette was using a dual output setup for live captioning. This means the captions can be streamed to different devices at the same time.

From Kansas, Jeanette was sending a signal to the Arkansas station, which put three lines of caption text on the TV screen. The captions were also available to those watching the TV broadcast via the internet. PLUS, the same live captions could be viewed as a live text stream by anyone in the world with an internet-connected device!
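To make the dual output idea concrete, here is a minimal Python sketch of one caption feed being fanned out to two destinations at once: a TV-style window that only ever shows the latest three lines, and an internet text stream that keeps everything. All class and function names are hypothetical and do not correspond to any real CAT software or broadcast encoder; in practice the TV side goes through the station's broadcast equipment, not a Python object.

```python
from collections import deque


class TvRollUpSink:
    """Hypothetical TV output: shows only the most recent `rows` caption lines,
    like the three lines of text described for the Arkansas broadcast."""

    def __init__(self, rows=3):
        # A deque with maxlen drops the oldest line automatically when full.
        self.window = deque(maxlen=rows)

    def send(self, line):
        self.window.append(line)

    def screen(self):
        return list(self.window)


class WebStreamSink:
    """Hypothetical internet text stream: keeps every line so any connected
    device can follow along live or read back the full transcript."""

    def __init__(self):
        self.transcript = []

    def send(self, line):
        self.transcript.append(line)


def dual_output(lines, sinks):
    """Send each caption line to every output as soon as it is written."""
    for line in lines:
        for sink in sinks:
            sink.send(line)


captions = ["Good morning", "and welcome", "to our service.", "Please be seated."]
tv = TvRollUpSink(rows=3)
web = WebStreamSink()
dual_output(captions, [tv, web])
print(tv.screen())     # ['and welcome', 'to our service.', 'Please be seated.']
print(web.transcript)  # all four lines
```

The design point the sketch illustrates is that the captioner writes once and each output decides how to present the text: the TV window scrolls and forgets, while the web stream keeps a complete record that remains available even if the broadcast itself is lost.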

Dual output allows a TV station to offer added value by streaming captions to people in emergency situations. For example, if the electricity goes off during an emergency and live TV becomes impossible, families can turn on their handheld devices, watch internet TV and still get the emergency closed captions. Isn’t that awesome?

Even more awesome, Carla is in London, watching live TV captions from an Arkansas TV station, originating from a captioner in Kansas. Doesn’t that just blow your mind!

If you would like to find out more about making your services accessible through remote dual output live captioning, contact us at bookings@121captions.com

Our captioner Michelle tells us about a day in her life, captioning for everyone around her...

We all know that every day in the working life of a captioner is different, and can be a challenge, and then there are days like Tuesday 26th November!

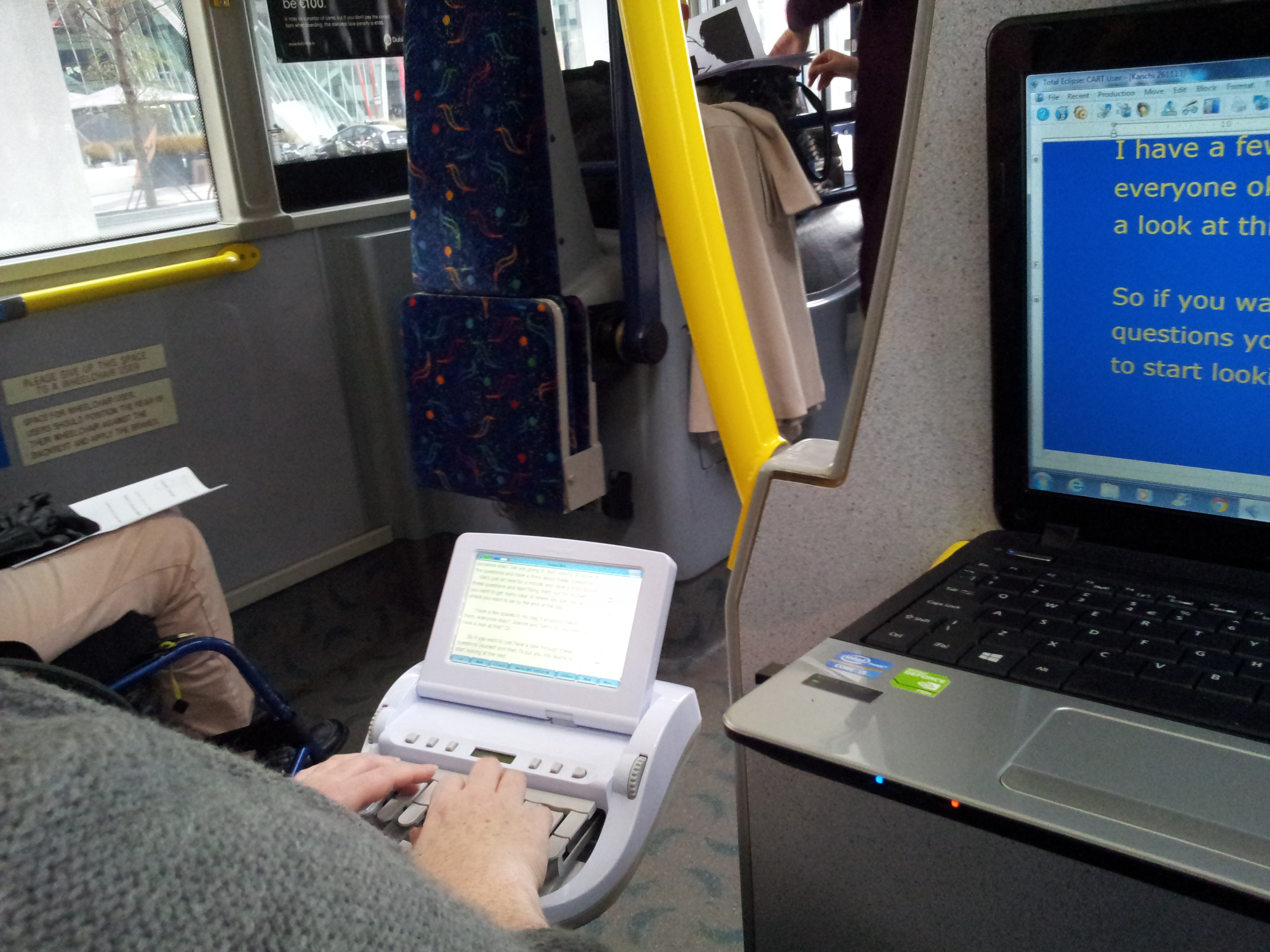

It began like any other day, with a booking for a regular client at a conference they were holding to discuss accessible tourism in Ireland. Interesting! But then I was told we wouldn’t be needed until after lunch, as the morning was being spent on an ‘accessible bus tour’ of some of the accessible sights of Dublin. Hold on a minute, though: if I’m there to provide access for deaf and hard of hearing tourists and I’m not needed, then how accessible is this tour going to be for them? So I asked how they’d feel if we tried to make the tour bus accessible. Without hesitation, we got a resounding yes: if you can do it, let’s go!

On the morning of the job I arrived at their office with laptops, screens, projectors, extension cables, etc. I could see the perplexed expressions as they tried to work out how best to explain that they wouldn’t be able to plug in my extension lead on the bus, or indeed my projector! But once they were confident that that wasn’t my intention, and that I really did have some clue about what we were about to embark on, everyone relaxed 🙂

And I have to say, it was by far the most fun job I’ve done. Three double-decker Dublin buses pulled up outside the office, everyone was given a name tag and allocated a bus. The idea was that as the buses travelled between destinations the facilitator would lead the discussion and debate onboard and then in the afternoon all three busloads would feed back their information to the group at large.

As our bus was now equipped with live captioning (CART – Communication Access Realtime Translation), the occupants of the other buses could see what we were discussing, or joking about! The tour very quickly descended into a school-tour mentality (we were even given some snacks) with lots of good-natured joking, and one of our blind facilitators even scolded me for shielding my screen from him, which meant he couldn’t copy my answers to the quiz 🙂

It soon became apparent that our driver was quite new to the concept of braking in a timely fashion and had probably never passed a pothole he didn’t enter! I was finding it increasingly difficult to keep myself and my machine upright, so the guys and gals on our bus decided to take bets on when the next bump in the road, traffic light, etc. would cause me and/or my machine to slip! It really lightened the mood and everyone had a laugh. It also brought home to people, in a very real and tangible way, that accessibility for everyone is not just a soapbox topic: it became something that everyone on our bus played an active part in (even if some of them were “accidentally” bumping into me to get an untranslated word, and a laugh). It showed that access matters, and that it should matter to us all!

What I didn’t know before that morning was that not only were we doing a tour on the bus, but we also had two stops: one at a brand new and very accessible hotel, and one at a greyhound race track. Initially it was suggested that I would stay on the bus and not transcribe the tours, but where’s the fun in that? So, once we got off the bus, the bets turned to how many different positions they could get me to write in: standing, sitting, balancing on a bed, squatting, machine on a table, machine held by another tour member in the lift! It was a truly interactive tour 🙂

And to finish the day off we went back to the Guinness Storehouse for our panel discussion and debate about accessible tourism in Ireland (and free pints of Guinness, of course).

All in all a brilliant day. An important topic discussed, debated, delivered and demonstrated in our different locations – the best job ever 🙂